This is the article version of my talk from BSides Edmonton & Calgary 2023. While the actual presentation is probably better, this one can serve as a decent overview.

Some Background

My name is Damien, and I’m a Security Analyst at an Edmonton & Calgary-based MSP. We’re an SMB that serves SMBs, which is important to keep in mind while reading this article. I have a diploma in IT Systems Administration and a Post-Diploma Certificate in Cybersecurity. I’m also an ISC2 member, with my CC (Certified in Cybersecurity). Super impressive, I know.

This article will follow the general path that my talk did, meaning this is what you’ll read about today:

- The incident that this talk/article got its name from.

- MITRE

- IOC & malicious activity for BEC

- A whole bunch of ugly graphs

- Controls

- Some preachy stuff

I’ll include inline links to various things during the article. Oh, and just because I use the acronym a lot, BEC = Business Email Compromise.

Ready? Let’s go!

The Million Dollar CEO Fraud

So, I should preface this by saying that this is not a hypothetical incident that I made up for this talk. This is a real incident that I discovered and handled, as unbelievable as that seems to some people. I have anonymized this company, so I won’t be saying its name, or mentioning details like its industry or size. I also should mention that when you look up what people actually call CEO Fraud, this is not it. But, it sounded cool, and it’s technically a CEO’s account doing fraud, so I figured I could get away with it.

Act 1: Discovery

January 10th, 2023, was a day like any other. I was doing an audit for a customer, which I’ll call Company X. Part of my job is to do security audits for our customers, based on CIS benchmarks. Now to be completely honest, I find audits to be one of the most boring and tedious parts of my job, but someone has to do them. I was working through a backlog that I occasionally let build up, so I’d spent the last few days primarily doing audits. With that in mind, can you blame me for going sniffing around for trouble? I mean, an audit is a great time to do a threat hunt, and checking for a few indicators of compromise wouldn’t take me that long.

So, I checked the most common IOC within Microsoft 365, which is what Company X uses as their productivity suite. This IOC is suspicious sign-ins, which you can export from the All-User sign-in logs in Azure/Entra. I did so, then opened the export in Excel and started filtering out records from known good IP addresses and locations. After I did this, I was left with one account that had several strange locations in their sign-in log. The logs showed successful sign-ins from Edmonton and Los Angeles within hours, among other suspicious locations. When I used an IP lookup tool on the suspicious IP addresses, they matched known VPN or Proxy servers, among other things.
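The filtering step I did in Excel can also be scripted. Here is a minimal Python sketch of the idea, not the exact process I used: the column names (`ip_address`, `location`), the known-good networks, and the sample rows are all made up for illustration, so adjust them to whatever your sign-in export actually contains.

```python
import csv
import io
import ipaddress

# Hypothetical known-good networks (documentation ranges, not real customer IPs).
KNOWN_GOOD_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),    # e.g. the office's public range
    ipaddress.ip_network("198.51.100.25/32"),  # e.g. a user's home IP
]


def is_known_good(ip_text):
    """Return True if the address falls inside any known-good network."""
    try:
        address = ipaddress.ip_address(ip_text.strip())
    except ValueError:
        return False  # unparseable values stay in the review pile
    return any(address in network for network in KNOWN_GOOD_NETWORKS)


def suspicious_sign_ins(csv_file):
    """Yield rows from a sign-in export whose IP is not on the known-good list."""
    for row in csv.DictReader(csv_file):
        if not is_known_good(row["ip_address"]):
            yield row


# Made-up sample export; the column names are assumptions.
SAMPLE_EXPORT = """user,ip_address,location,status
ceo@example.com,203.0.113.10,Edmonton,Success
ceo@example.com,192.0.2.77,Los Angeles,Success
ceo@example.com,198.51.100.25,Edmonton,Success
"""

flagged = list(suspicious_sign_ins(io.StringIO(SAMPLE_EXPORT)))
for row in flagged:
    print(row["user"], row["ip_address"], row["location"])
```

Whatever is left after the known-good records are removed is the pile you then review by hand and run through an IP lookup tool.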

Now, Company X has an internal IT-type person, so I sent him the sign-in logs for that user and asked him if this was expected behavior. He got back to me very quickly and confirmed that many of the sign-ins were not legitimate, and asked if I could provide a record of file access. Absolutely. Obviously, we also secured the account.

Perfect, something other than an audit! (Famous last words…)

Next, I needed to complete my RCA (root cause analysis). It’s basically a document I put together where I detail the incident from discovery through remediation, why it happened, and most importantly, what happened during the incident.

Act 2: Investigation

It wasn’t until I had begun my investigation that I realized that the affected user was the CEO of Company X. I was reviewing the file access records when I noticed a lot of access from the Executive SharePoint site, which prompted me to check the user’s job title in Azure. You can imagine that it immediately raised the stakes on everything quite a bit.

So, how did I do my investigation, and what did I discover?

First, I requested a 90-day inbound and outbound message trace for the compromised account, giving me just over 3k results. Then I started the export for 90 days of user activity from the Unified Audit Log, or UAL. The UAL gave me 17k records, which isn’t a lot to me now, but at this time was more than I’d ever gotten. Keep in mind, I had only done perhaps a dozen BEC investigations up to this point.

I knew that I needed something to make the investigation easier, so after some research, I started using the HAWK forensics tool to investigate Microsoft 365 business email compromise. HAWK provided me with several more logs, including a more granular mailbox audit log with specific email access, all nice and cleaned up, containing just over 11k records.

HAWK also provided my favorite of its features: a converted authentication log that uses an IP address lookup API to match authentication activity from the audits to city and country. This gave me 875 unique IPs, so I’m glad I didn’t need to do that all manually. I also grabbed some other logs, such as the user audit in Azure. Eventually, I also eDiscovered some emails.

Anyone who’s investigated an email compromise knows the next steps. A tedious review of the audit logs is needed, where you separate records from known good IP addresses and suspicious IP addresses. You do the same thing with the message trace and exchange audit log, basically anything you can categorize.

So, now I have a pile of information in front of me, just like a puzzle, and it’s time to put everything together.

Timeline

This is the incident timeline. I’m going to go in chronological order, from the likely initial access on November 7th to the date of discovery on January 10th/11th.

November 7th

After some investigation, I determined that November 7th, 2022 was the likely date of initial access, although I had records going back to October 12th. This was the first suspicious sign-in on the account, and a Defender alert about a malicious URL click had also been triggered for the CEO’s account on this date. What this means is that the CEO likely fell victim to a phishing email, which at the time was not caught by the spam filter. Later, Microsoft realized that the URL was malicious and sent an alert about it. Unfortunately, it was too late.

November 11th

The next account access was on November 11th, when many emails and files specifically about Company X’s dealings with a financial company were accessed. Obviously, our threat actor had a plan, because they created an inbox rule to redirect all emails from the financial company to the RSS Subscriptions folder. Classic.

Reconnaissance

I classify this time period of November 12th to November 29th as reconnaissance, as there were several sign-ins with various emails and files accessed. These were things such as internal documents, templates, contracts, and many more emails and files to do with Company X’s business with the target financial company.

November 30th

On this date, things start to pick up. The threat actor emails the finance company with a request to add a new authorized signer to the account, asking what information is needed. The finance company responds with a list of personal data needed and some documents.

December 2nd

The threat actor responds with the information and signed documents. It’s on this email that the threat actor CCs the supposed new treasurer, who is using an email address with a custom domain name that would be the type of thing an independent CPA would use, which is what they were claiming this new treasurer was.

December 5th

The finance company responds with several more documents that need to be signed for the new signer to be added.

December 7th

The threat actor provides signed copies of the documents, but the finance company sees a problem with one of them, and it needs to be re-signed.

December 12th

The threat actor sends the re-signed documents. It’s also around this time that the threat actor registers another custom domain, this time a copycat or typosquat of Company X’s main domain. They add this domain and the fake CPA domain to the original inbox rule. From this point on, the threat actor communicates exclusively using the copycat domain.

Bureaucracy

After this, there’s a lot of back and forth, primarily while the financial company asks some clarifying questions such as “Is so-and-so still going to be a signer?”, things like that. The threat actor is also impatiently emailing the financial company every few days, asking when the new treasurer is going to be added.

December 21st

The threat actor emails once again asking for an update. The finance company advises that the National Bank Independent Network (NBIN), which is like an overseer for portfolio and investment management firms, has gotten involved. They request more information, which is provided.

December 23rd

The threat actor’s fake treasurer identity (actually a stolen identity belonging to a retiree from Quebec) is added to the financial account as an authorized signer.

Act 3: Unraveling

I should mention that at this point, all communications about this have been via email. At no point did anyone from the finance company pick up the phone and call the CEO or any other signers on the account to confirm. They didn’t even CC them in the email.

January 4th

The threat actor took a break for Christmas and New Year’s, it seems, because the next activity was on January 4th. On that date, the threat actor used the CEO’s account to start the process of sending a wire transfer, advising the finance company to coordinate with the fake treasurer and asking what information would be required. After some back and forth, the threat actor initiated a wire transfer to a bank account in Hong Kong, for $710,000 USD, which at the time was roughly $950,000 CAD.

January 6th

The bank emails the threat actor to advise that the transfer was finalized. Since the transfer was in USD, they had to ‘raise the cash’ since the account that was being transferred from was in CAD.

January 9th

The threat actor emails the financial company advising that the wire was not received. The financial company confirms there was an error, resends the transfer, and this time it successfully goes through.

January 10th

This was the date that I discovered the compromise, by complete accident. I wasn’t able to complete my investigation on this date, but I had exported and cleaned most of the logs.

January 11th

I continued my investigation at approx. 11:50 a.m. MST, according to my ticket notes. Meanwhile, the threat actor had initiated a second wire transfer at 10:36 a.m. MST, to a different bank account in Hong Kong, this time for 1.3 Million CAD.

I discovered the January 4th wire transfer first. I knew that one had already gone through, so I recorded it and resolved to finish investigating up to the current day before I called the Internal IT person. Not long after, I discovered that a second wire transfer had been sent, by this point roughly two hours earlier. Was there still time to stop this? I called Internal IT, who just happened to be in the CEO’s office. He put me on speaker phone, and I asked the CEO if he was aware that two wire transfers had been made from Company X’s account to a bank in Hong Kong, one of them roughly 2 hours ago.

He obviously was not.

With as much professional urgency in my voice as I could muster, I told him to call the finance company and cancel the transfer, which he did.

Epilogue

After this, I continued on.

I completed my investigation and put together an incident RCA document. I sent the RCA document and all of the logs and exported emails to the Internal IT, and eventually law enforcement as well.

Now the good news is that the financial company refunded Company X for the money that was stolen. Weeks after the incident I got more information from Company X’s account manager at Accurate, who told me that the financial company didn’t follow their internal process for adding authorized signers, or for making large fund transfers. Additionally, anyone who actually looked at Company X’s records would have seen that the transfer to Hong Kong was unprecedented. I cannot say what Company X does or what industry they are in, but I can assure you that there is no reason for them to be doing business with Hong Kong.

Who hacked Company X? I have no idea, and I don’t think I’ll ever know. I’m not certain if it was an APT, but this was not a simple heist, like changing an employee’s banking details. The documents for adding the signer and doing the transfer looked complicated, and I would need at least several hours of research to understand them enough to fill them out. Whoever did this has good knowledge of the banking and financial systems, specifically within Canada. They knew the lingo, they knew to wait for the New Year, as sending a wire transfer on December 26th would have looked suspicious. This was not someone working alone. But like I said, we’ll probably never know. All we can do is learn from this and improve our security posture so that we don’t become this threat actor’s next victim.

MITRE ATT&CK

By now you should be aware of MITRE ATT&CK, which is a framework for classifying malicious activity around an incident. It was originally developed for incidents caused by APTs (advanced persistent threats), but anyone can use it. Using the Matrix, we can view specific techniques or tactics within categories, as well as suggested Mitigations. We can also use the ATT&CK Navigator to visualize an incident.

Within the MITRE matrices, there is specifically a Cloud matrix, which contains techniques from general SaaS and IaaS incidents, as well as from Office 365, Google Workspace, and Azure AD. I’ve found that unfortunately it’s not as detailed as the other matrices, so I hope it might be improved in the future. I’m only going to go over the specific categories with techniques relevant to our incident, but I recommend anyone who’s never heard of MITRE ATT&CK to check it out and play around.

Initial Access

First, we’ll begin with initial access. This was via a phishing link, because as I mentioned earlier, I actually found a Defender alert buried in the global administrator mailbox that was missed, alerting that the CEO had clicked on a malicious link. This was the same day I determined was likely the date of initial access.

Since this was an Office 365 account, we’d classify this as the technique Valid Accounts, sub-technique Cloud Accounts.

Persistence

Next, we’ll look at persistence. MFA was enabled on the account, but it was bypassed, either by AitM or another bypass method; I can’t conclusively say. Once they were in the account, the threat actor added an additional MFA method, for persistence. The user’s original MFA method was text (not phishing resistant) and the threat actor added a generic software TOTP.

Defense Evasion

Under Defense Evasion we have the technique Hide Artifacts, and the sub-technique Email Hiding Rules, also known as filters. We also have the technique Indicator Removal and the sub-technique Clear Mailbox Data. Emails were frequently deleted by the threat actor, but thankfully I could recover them.

Collection

Finally, under Collection, we have the technique of collecting data from information repositories, specifically SharePoint. SharePoint and OneDrive were where a large amount of sensitive company data was accessed from, which was used during the fraud. We also have the technique of email collection, specifically remote email collection. I have a record of email access, and it is likely that emails and their attachments were exfiltrated at various times.

IOC & Malicious Activity

Next, I’m going to talk about indicators of compromise (IOC) and other malicious activity around BEC further. While I’ll cover controls in the next section, I’m going to put recommended controls beneath each IOC/Activity, just to make things easy.

Authentication Irregularities

Overwhelmingly, the most common IOC for BEC is impossible travel or other suspicious authentication locations or properties. When a user who only accesses from Edmonton suddenly starts using a sketchy proxy server from China, or a user who only uses a specific Windows device suddenly starts using Linux, you know something strange is happening.

Suggested controls: Authentication controls, alerting.
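To show what an impossible travel check boils down to, here is a rough Python sketch. It is not how Microsoft or any alerting product implements it: the 900 km/h threshold is my own assumption (roughly airliner speed), the city coordinates are approximate, and the timestamps are made up.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumed threshold, roughly airliner cruising speed


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(first, second):
    """Each sign-in is (timestamp, latitude, longitude).

    Returns True when the speed needed to get from one sign-in location to
    the other exceeds the plausible maximum.
    """
    hours = abs((second[0] - first[0]).total_seconds()) / 3600
    km = distance_km(first[1], first[2], second[1], second[2])
    if hours == 0:
        return km > 0  # two places at the exact same moment
    return km / hours > MAX_PLAUSIBLE_SPEED_KMH


# Approximate city coordinates; the timestamps are made up for illustration.
edmonton = (datetime(2023, 6, 1, 9, 0), 53.5461, -113.4938)
los_angeles = (datetime(2023, 6, 1, 11, 0), 34.0522, -118.2437)
print(is_impossible_travel(edmonton, los_angeles))
```

Keep in mind that VPNs, mobile carriers, and bad geolocation data all cause false positives, so treat a hit as a reason to look closer rather than proof of compromise.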

Forwarding Changes & Mail Rules/Filters

Forwarding changes and email rules/filters are one of the most common forms of malicious activity during a BEC. Rules/Filters that either redirect certain senders or subjects to a folder/apply a specific label, or rules/filters that just delete all incoming emails are very common.

Suggested control: Alerting with or without automatic remediation.

Spam Email Sending

Phishing emails have to spread in some way, right? Compromised accounts are frequently used to send spam emails, generally containing phishing links. An account will send spam under two circumstances:

- It never had any value to a threat actor, meaning the compromised account does not have the access, permissions, or role that the threat actor wants.

- The account has fulfilled its purpose, or the threat actor believes they might be caught soon, so they ‘burn’ the account by sending spam.

Suggested control: Message limits. This is available in Exchange Online as an option under the Outbound Anti-Spam Policy. Gmail already applies message limits, and I wasn’t able to find an option for an Admin to set one.

Unexpected Inbox Activity

Emails unexpectedly being read, or emails being deleted from certain folders, the trash, or the sent items, are another IOC.

Suggested control: User awareness training.

Sensitive Access

Often when an account is accessed, you will see records for email and file access around sensitive information. Email compromise tools used by hackers often have the ability to automatically search the inbox and cloud file storage for specific keywords, such as password, invoice, payment, credit, visa, etc. Sometimes this is to find invoices so they can craft their own lookalike, other times it’s to find lists of passwords in an Excel sheet stored in SharePoint.

Suggested control: DLP, potentially. I’m not too certain about this one.
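One thing defenders can do during an investigation is turn the attacker's keyword list around and use it to triage which accessed emails and files deserve a closer look. The sketch below is only an illustration of that idea: the keyword list comes from the examples above, while the item names are invented and the matching logic is my own assumption, not how any attacker tool or DLP product works.

```python
import re

# Keywords taken from the examples mentioned above.
SENSITIVE_KEYWORDS = ("password", "invoice", "payment", "credit", "visa")

# Match a keyword only when it is not preceded by another letter, so that
# "client_passwords.xlsx" matches but "Advisable reading.pdf" does not.
KEYWORD_PATTERN = re.compile(
    r"(?<![a-z])(" + "|".join(SENSITIVE_KEYWORDS) + r")", re.IGNORECASE
)


def sensitive_hits(item_names):
    """Map each accessed item name to the sensitive keywords found in it."""
    hits = {}
    for name in item_names:
        found = sorted({match.lower() for match in KEYWORD_PATTERN.findall(name)})
        if found:
            hits[name] = found
    return hits


# Made-up file names and subject lines from a hypothetical audit log.
accessed = [
    "client_passwords.xlsx",
    "Invoice 1042.pdf",
    "Team lunch menu.docx",
    "Advisable reading.pdf",
]
for name, keywords in sensitive_hits(accessed).items():
    print(name, keywords)
```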

OAuth Application Use

This is one that I’ve seen in increasing frequency, at least the second usage. This is the use of OAuth applications, either for OAuth phishing by the user consenting to a malicious app, or for persistence, mailbox exfiltration, or autosending by the threat actor consenting to the application. This is one of the activities that I dread the most, as some research I’ve done found that common applications used by threat actors could download the entire user mailbox to PST or another email format, making it essentially impossible to tell what was accessed. I’ve also recently seen application registration directly in the tenants, which Microsoft recently covered in a threat intelligence article. It’s locked behind the Security Admin Center, but shoot me an email or leave a comment and I’ll send you a copy.

Suggested controls: Application restrictions, alerting.

Email Compromise Data

I very painfully reviewed every BEC I have responded to, where the customer is still a client. This left me with exactly 69 incidents that I manually transferred into an Excel spreadsheet, and then used pivot tables to visualize.

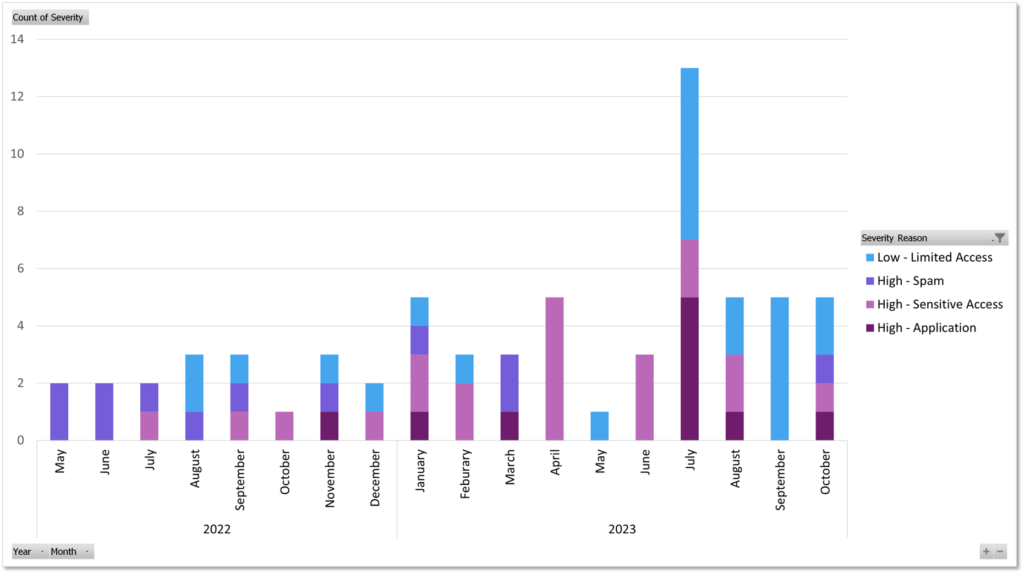

This graph shows most of the incidents that I have handled up to the middle of October, as I’m missing records from former customers. You can see that at first, the most common incident was spam mass-mailing. The last time I responded to an incident with spam mass-mailing was in March, until I saw it again in October. From January 2023 onwards I saw a large increase in not only incidents but also incidents specifically where sensitive data was accessed. November was the first time that I saw the threat actor consent to a malicious application, which was specifically an autosender application. This activity has been increasing as well.

Finally, there is a decent number of incidents where there was very limited activity within the account after it was compromised. This is usually because alerting caught the incident, and I was able to remediate it before it escalated.

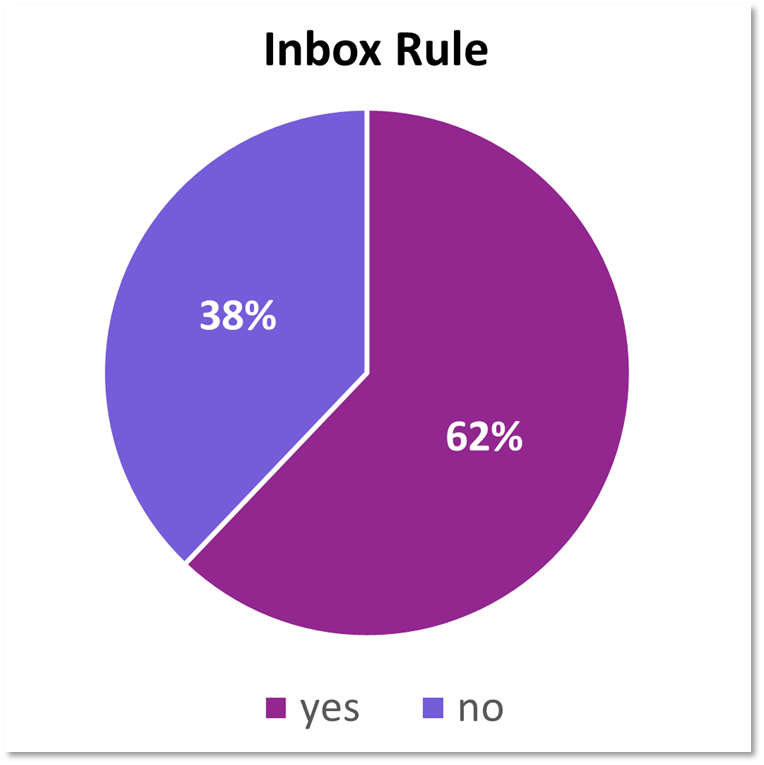

Inbox rules were created in two-thirds of the incidents I have responded to. The rules are generally consistent, either redirecting mail to the RSS Subscriptions or Conversation History folder or deleting all incoming emails. Occasionally they will branch out and mark as read or delete emails with certain subjects. For example, I’ve seen rules that target emails with subjects containing Microsoft, password, reset, account, scam, hacked, etc. This is sometimes because they are resetting account credentials and don’t want the victim to know.
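Because these rules are so consistent, they are easy to check for. Below is a small Python sketch of that check. The rule format is a simplified dictionary I invented for illustration (fields like `move_to_folder` and `subject_contains` are assumptions, not the real Exchange Online schema), so you would need to map your own rule export onto it.

```python
# Folders and subject keywords taken from the patterns described above.
HIDING_FOLDERS = {"rss subscriptions", "conversation history"}
SUSPICIOUS_KEYWORDS = {"microsoft", "password", "reset", "account", "scam", "hacked"}


def rule_findings(rule):
    """Return a list of reasons a rule looks like attacker activity (empty if none)."""
    findings = []

    folder = (rule.get("move_to_folder") or "").strip().lower()
    if folder in HIDING_FOLDERS:
        findings.append("moves mail to rarely checked folder: " + folder)

    keywords = {word.lower() for word in rule.get("subject_contains", [])}
    has_conditions = bool(keywords or rule.get("from_addresses"))
    if rule.get("delete_message") and not has_conditions:
        findings.append("deletes all incoming mail")

    matched = sorted(keywords & SUSPICIOUS_KEYWORDS)
    if matched and (rule.get("delete_message") or rule.get("mark_as_read")):
        findings.append("hides security-related subjects: " + ", ".join(matched))

    return findings


# Made-up example rules in the hypothetical format described above.
sample_rules = [
    {"name": "rule-1", "from_addresses": ["advisor@finance.example"],
     "move_to_folder": "RSS Subscriptions"},
    {"name": "rule-2", "delete_message": True},
    {"name": "rule-3", "from_addresses": ["news@vendor.example"],
     "move_to_folder": "Newsletters"},
    {"name": "rule-4", "subject_contains": ["Password", "reset"],
     "delete_message": True},
]
for rule in sample_rules:
    print(rule["name"], "->", rule_findings(rule) or "no findings")
```

A legitimate user can of course create a rule that matches one of these checks, so any hit still needs a human to confirm it.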

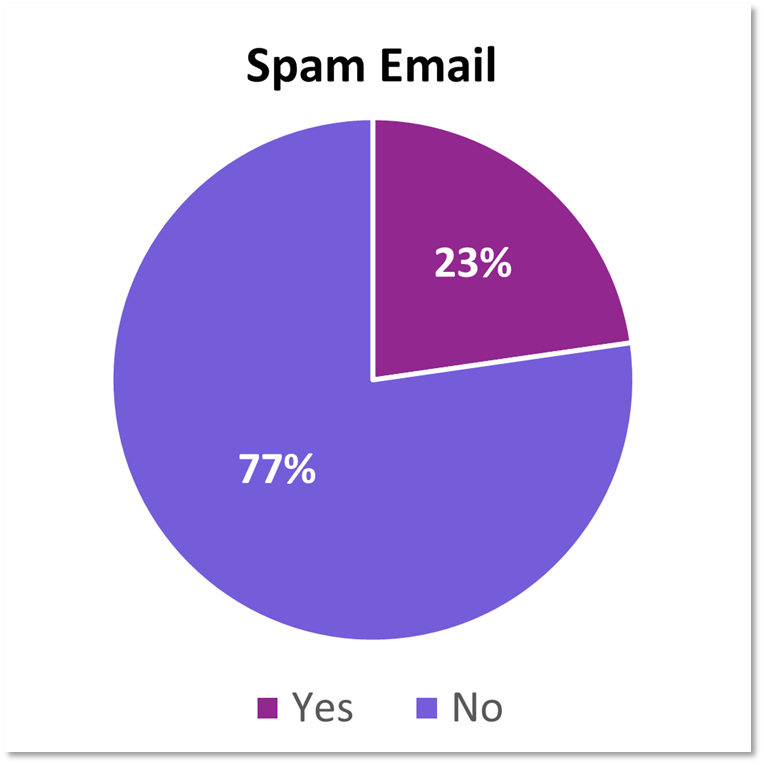

Spam emails were sent in a quarter of the incidents, generally containing a link to a phishing website via a fake PDF or DocuSign link. I also once saw a link to a fake PDF with a redirect stored within the user’s OneNote.

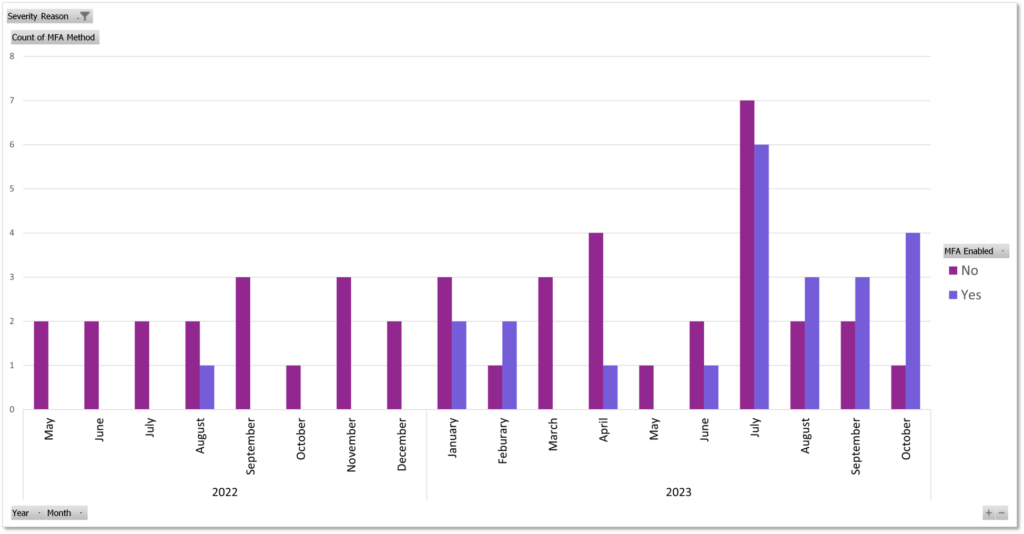

Finally, this chart shows incidents and their MFA status. As you can see, MFA being bypassed is becoming more common. July and August were the first months where more accounts with MFA were compromised than accounts without. February does not count, as I’m missing 3 records from that month.

I have limited data on how often MFA has successfully protected accounts, but with the rise of AitM phishing kits, I’m guessing it’s less than the 99.9% of times that Microsoft still claims. If I could determine how MFA was bypassed, it was only because I could locate the phishing website that the victim visited and confirmed that malicious code was present on the website. For other incidents, such as with Company X, AitM is still my best guess.

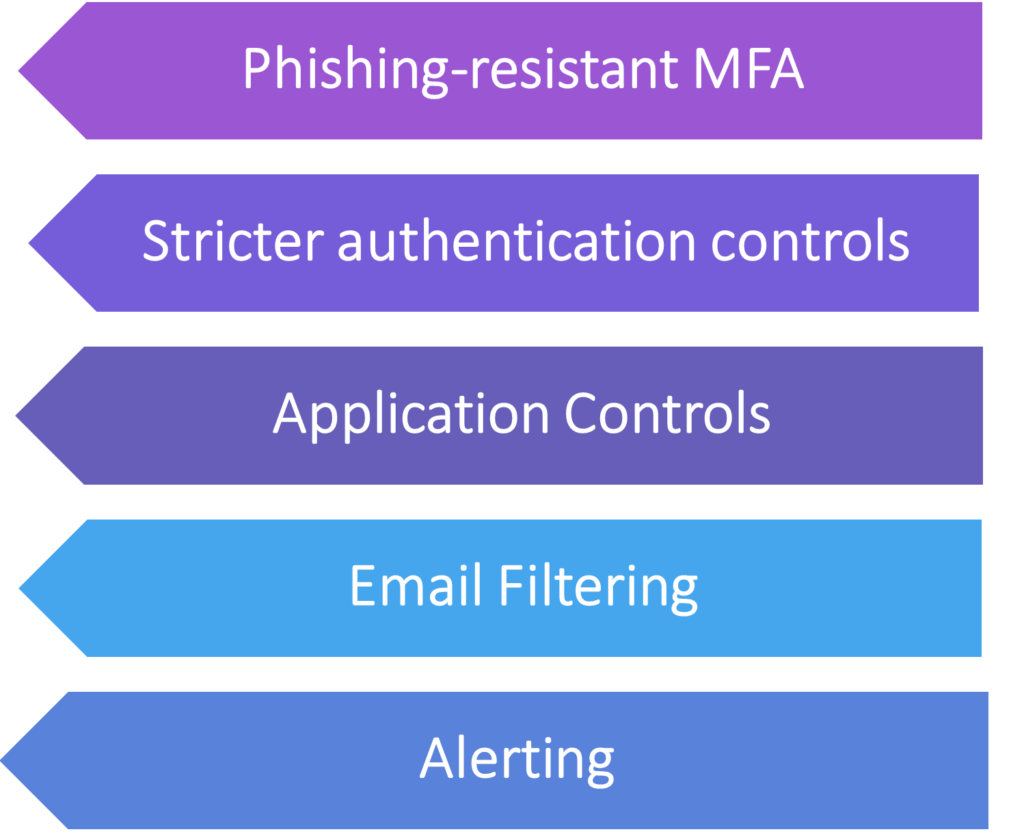

Controls

Now I’ll do a deeper dive into controls for BEC.

Technical Controls

When it comes to technical controls, one of the best ones is phishing-resistant MFA. This is MFA that is resistant to token theft or social engineering. FIDO2 hardware tokens are a great example of this, as is certificate-based authentication.

Another control I like is stricter authentication controls and requirements, which are known as conditional access in 365 and context-aware access in Google Workspace. Geofencing to the company network or restricting access to only approved and secured company devices is a great way to prevent any further access once a threat actor has gotten in the front door, so to speak.

Both Azure and Google allow you to place restrictions on third-party app access to data, which will allow you to avoid consent phishing and other malicious uses of OAuth applications. You can restrict the permissions that the apps can have, to avoid risky permissions like mail sending, or disable user consent altogether, at least in 365.

Another control that I think is important to protect against email compromise is a good spam filter. Whether you harden the one that natively comes with your email platform, or you use a third-party one, preventing the phishing emails from even reaching the inbox already helps you reduce risk.

Finally, one of my favorite controls, and the one I think is most vital behind MFA, is alerting. There are options for alerting on suspicious activity within most cloud email systems, and they aren’t all exclusively for the use of enterprise organizations. There are native alerting solutions available for certain cloud email systems which may have some licensing limitations, and there are third-party alerting solutions that work for both big and small companies, solo IT or MSP. Admittedly, enabling alerting for customers in January 2023 is likely part of the reason that we have seen an increase in email compromise activity. Not sponsored, but Blumira has let us abuse their free tier for longer than most companies would, and their MSP-focused 365 alerting has prevented many incidents from reaching the point where they become more severe.

Operational & Administrative

I’ll always preach this, but I think that security awareness training is a very important administrative control, provided it’s done right. No one particularly enjoys doing security awareness training, but if you can do it so your employees see it as more than just a boring thing they’re forced to do once a year, they’re going to retain the information better. Run phishing simulations regularly to test the effectiveness of your program, adapting your simulations to the phishing emails targeting your organization. AKA Threat Intelligence!

Then there’s everyone’s favorite, the policies and procedures. You should have policies for how your organization handles the storage of sensitive information, email security, and changes to financial information. If a vendor emails you asking to change the account where payments go, what are you going to do to confirm the veracity of that request, if anything? Same for employees, do you require employees to sign a document in person when they want to change their direct deposit information, or are you fine if they send accounting an email from a Gmail account?

You also want to ensure that the companies you partner with take your financial security seriously. Will your bank call you to confirm large changes such as adding a new signer? What compliance standards must they follow, and do they follow them? All of these and more are things to consider when thinking about how to protect your organization from a potentially company-ruining incident.

Why Should I Care?

Maybe some of you are thinking this, or know someone who thinks this way, or this is a strawman, but why should I care? Someone’s email gets hacked, and they send some spam, or they view a few invoices, maybe we lose some money.

Well, large company or small company, nonprofit or law firm, the fallout from a BEC could still be highly damaging to a business. Had the second wire gone through, Company X would have struggled to make payroll. If your email gets hacked and a customer pays to a fake bank account, do you think they’re going to be happy about that, especially if it was “your fault” in the first place? Do you think I feel safe about doing business with a company that had a hacked account and sent me a phishing email?

Do you think an email compromise is a privacy breach?

Well, we don’t store any personal information in our emails, so we should be fine.

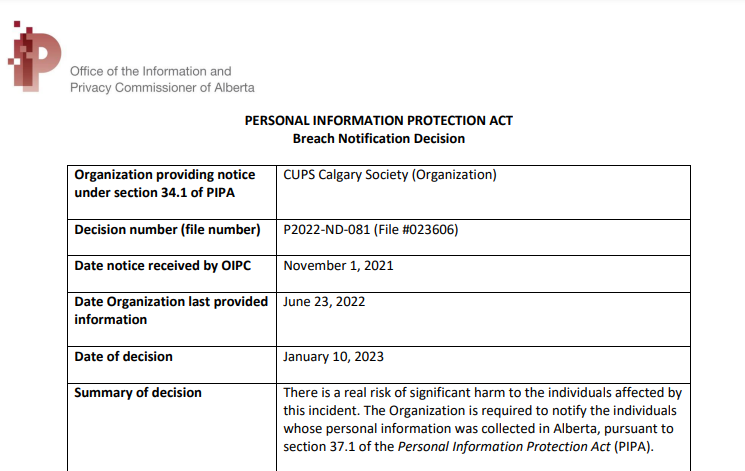

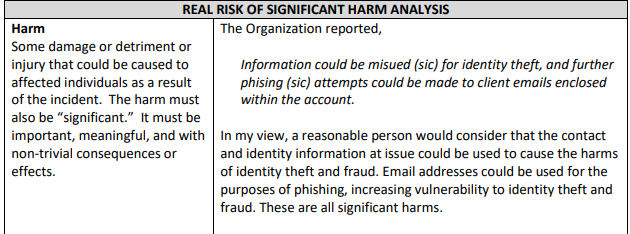

Now I’m not a lawyer, but according to the Alberta Privacy Commissioner, you might not be.

A decision from OIPC in January 2023 describes an incident where an employee of Calgary Urban Project Society was successfully phished, and their account sent out around 150 spam emails. OIPC determined that “There is a real risk of significant harm to the individuals affected by this incident” which required CUPS to do breach notification, which isn’t cheap. Sometimes, they are also required to provide credit monitoring services, which they were not in this case.

This is far from the only decision saying essentially the same thing, about the exact type of incident.

Current legislation around privacy breaches, and specifically breach reporting, is relatively toothless, but a new bill, the Consumer Privacy Protection Act, proposes stronger penalties both for negligence causing the breach and for failing to report the breach.

Now hopefully that’s enough to give some of you what you need to convince your boss to move to phishing-resistant MFA. 😉

OIPC Breach Notification Decisions

(If anyone from OIPC is reading this – keep up the good work. <3 you.)

Summary

I ended my talk with 4 points, which I think summarize things pretty well.

- Email compromises are bad

- Email compromises are predictable

- Email compromises are preventable-ish

- Email compromises are serious business

BEC has become a passion of mine recently; literally 3/4 of the last posts on my blog are somehow related to it. Check out my other posts if you’re interested, which include a BEC remediation guide, and a deep dive I did on a malicious Azure application.

I’m gonna go eat dinner now. I’ll update this in time for Calgary BSides.